I'm still thinking about the Sony A7S. I'm not sure why, but I am. Er, well, yes, I do know why I'm still thinking about it. Something is Grinding my Gears.

Michael Reichmann wrote some years ago about how the A7S files "felt" similar in quality to the output of Kodak's CCD medium format sensors. I couldn't help but notice he didn't say the same thing about the A7 or the A7R sensors, even though both cameras had been on the market for a year before the A7S was introduced.

The 24-megapixel A7 still produces beautiful images for me. What could be better? Well... maybe a year's worth of developmental "baking" put more quality into the A7S sensor than into the earlier ones? Or, as was thought at the time, was the 8.4-micron photosite size the Source of Fat Pixel Goodness?
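Where does a number like 8.4 microns come from? Roughly, it's the sensor width divided by the number of pixels across it. Here's a minimal Python sketch of that arithmetic. The sensor widths (35.6 mm for the A7S, 35.9 mm for the A7R) are my assumption, taken from Sony's published specs rather than from this post; the pixel counts are the ones quoted further down. It also ignores any gaps between photosites, so treat the results as approximations.

```python
# Assumed sensor widths in mm (Sony's published specs, not from this post).
SENSOR_WIDTH_MM = {"A7S": 35.6, "A7R": 35.9}
# Horizontal pixel counts of the sample images, as quoted in this post.
H_PIXELS = {"A7S": 4246, "A7R": 7354}

def pixel_pitch_um(width_mm: float, h_pixels: int) -> float:
    """Approximate photosite pitch in microns: sensor width / pixels across."""
    return width_mm * 1000.0 / h_pixels

pitch_s = pixel_pitch_um(SENSOR_WIDTH_MM["A7S"], H_PIXELS["A7S"])
pitch_r = pixel_pitch_um(SENSOR_WIDTH_MM["A7R"], H_PIXELS["A7R"])

# Light-gathering area per photosite scales with the square of the pitch.
area_ratio = (pitch_s / pitch_r) ** 2

print(f"A7S pitch ~{pitch_s:.2f} um, A7R pitch ~{pitch_r:.2f} um")
print(f"Each A7S photosite has ~{area_ratio:.1f}x the area of an A7R photosite")
```

So each A7S photosite has roughly three times the area of an A7R photosite, which is the whole premise behind "fat pixels."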

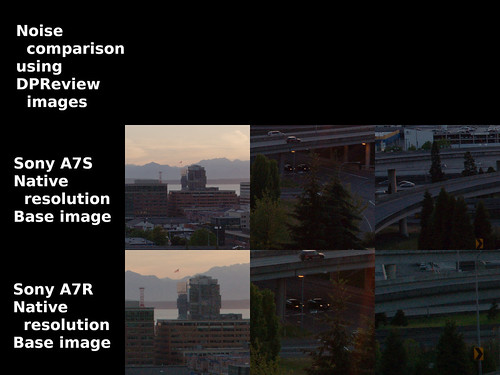

I read DPReview's review of the A7S and downloaded two of their ARW (RAW) samples. I thought it might be interesting to see how they behaved when subjected to my standard image processing using Rawtherapee and the Gimp. It feels like there might be an opportunity to learn something using someone else's comparison images.

Setup ~

- Sony A7S and A7R images downloaded from DPReview

- Rawtherapee

- Adjust Lightness +30

- Adjust Curves by raising the middle

- Keep the images at their out-of-camera native resolutions (i.e., no resizing)

NOTE: The DPReview images were underexposed by 1.7 and 2 EV and filed under the heading "Dynamic Range in the real world." They were trying to share something they "saw" regarding noise control and dynamic range. So I needed to do what they did: raise the shadows until the overall image looked more or less OK, then consider the noise and dynamic range, particularly in the dark, amplified areas. Where DPReview used Lightroom's Exposure slider, I used Rawtherapee's Lightness control so as to avoid blowing out the highlights.
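To show why a lightness-style lift can spare the highlights where a plain exposure push clips them, here's a minimal Python sketch. It assumes tone values are linear and normalised to 0..1. The `shadow_lift` power curve is a hypothetical stand-in for illustration only, not Rawtherapee's actual Lightness algorithm, and `exposure_push` is a naive linear push (Lightroom's own Exposure slider may roll off highlights more gently than this).

```python
# Made-up sample tone values, linear 0..1 (0 = black, 1 = clipped white).
SAMPLES = [0.01, 0.05, 0.25, 0.5, 0.9]

def exposure_push(x: float, ev: float) -> float:
    """Naive linear exposure push: scale by 2**ev, then clip at 1.0."""
    return min(1.0, x * 2.0 ** ev)

def shadow_lift(x: float, gamma: float = 0.5) -> float:
    """Hypothetical lightness-style lift (NOT Rawtherapee's algorithm).

    A power curve with gamma < 1 raises shadows and midtones but still maps
    0 -> 0 and 1 -> 1, so nothing below white gets pushed past white.
    """
    return x ** gamma

for x in SAMPLES:
    print(f"{x:5.2f}  +2 EV: {exposure_push(x, 2.0):.3f}  lift: {shadow_lift(x):.3f}")

# Values that the +2 EV push drives to pure white.
clipped = [x for x in SAMPLES if exposure_push(x, 2.0) >= 1.0]
print("clipped by +2 EV:", clipped)
```

Either way, whatever noise is sitting in the shadows gets amplified right along with the signal; the curve just keeps the highlights from being pushed off the top.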

Comparison ~

DPReview's base image

With my processing applied

Comments ~

The rather heavy Rawtherapee processing was needed to bring up the shadows of the two underexposed images borrowed from DPReview. These images are meant to stress the image capture system by amplifying (expanding) the dark areas so that we can evaluate things like dynamic range and sensor noise, particularly in the expanded/raised shadow areas. Most of us would rarely, if ever, shoot a real-world photograph this way, and, as we will see in future posts, I have questions about the validity of this as a test method.

The Sony A7S image, shot at ISO 100 and kept at its native 4246x2840-pixel resolution, looks smooth in the light areas and slightly noisy in the deep, now-raised shadow areas. This is pretty remarkable to me, particularly when I compare the uncorrected base image to the lightness-and-curves-processed result.

To my eyes, the A7S image has lower noise than the 7354x4912-pixel A7R image.

We were led to expect this, right? Seems intellectually correct. Lower pixel density sensors have lower noise than sensors where the photosites are packed in like sardines, right?

So what's my Gear Grinding problem? Well, here it is. DPReview wrote "... Who wins? In a nutshell? a7R, hands down..."

Please tell me my eyes are really bad, or tell me that we see the same thing. I don't see where the A7R image "wins" in any dimension except size (har!).

The Gear Grinding problem is partially explained in the next sentence. "...we've _downscaled_ [emphasis mine] the a7R image to the a7S' 12MP resolution, the a7R offers more detail and cleaner shadow/midtone imagery compared to the a7S. Downscaling the a7R image also appears to have the added benefit of making any noise present look more fine grained; the a7S' noise looks coarse in comparison..."

This is where they have me really and honestly Gear Grindingly stumped. If you're trying to compare image quality between different-resolution sensors, why on Gawd's Green Terra Firma would someone want to downsize the larger image to the smaller sensor's dimensions?

I'm serious. Why? What does it show? What does it prove? How would it be relevant to photography except when someone is willing to throw away potential resolution to, what?, prove an Obvious Point?

The Obvious Point being that something called "pixel binning," or downsizing, works. Averaging neighboring pixels averages out (reduces) the noise across an image. With that come more accurate colors, because the chromatic variations caused in part by subtle noise, even at low ISOs, get averaged out too.
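Here's a minimal Python sketch of why downsizing "works" on noise. It assumes pure, uncorrelated Gaussian noise on a flat grey patch (real sensor noise is messier, and real resizing filters are fancier than this) and uses simple 2x2 block averaging as the downsizing step. Averaging four independent samples should cut the per-pixel standard deviation roughly in half.

```python
import random
import statistics

def noise_image(width: int, height: int, sigma: float, seed: int = 0):
    """Flat patch with uncorrelated Gaussian noise (an idealised assumption)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, sigma) for _ in range(width)] for _ in range(height)]

def bin_2x2(img):
    """Downsize by averaging each non-overlapping 2x2 block into one pixel."""
    h = len(img) // 2 * 2
    w = len(img[0]) // 2 * 2
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]

def pixel_std(img) -> float:
    """Per-pixel noise: standard deviation over every pixel in the image."""
    return statistics.pstdev([v for row in img for v in row])

full = noise_image(400, 400, sigma=10.0)
binned = bin_2x2(full)
print(f"full-size noise ~{pixel_std(full):.2f}, binned noise ~{pixel_std(binned):.2f}")
```

In general, averaging N independent pixels reduces the noise by about the square root of N, which is exactly the Obvious Point: the downsized image looks cleaner because it has thrown resolution away.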

What if, instead, reviewers took a 4240-pixel-wide section out of the A7R image and compared like-sized image to like-sized image? Adjusting for the field of view by choosing the focal length correctly, of course. Wouldn't that make for a more honest evaluation, if what you're trying to evaluate is dynamic range, color depth, resolution, and noise?
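A bit of arithmetic helps here. This minimal Python sketch uses the pixel dimensions quoted in this post to estimate how much averaging DPReview's downscale does, and what focal length the A7R would need so that a same-size centre crop frames roughly the same scene as the A7S. It assumes both sensors are about the same physical width (they differ by a fraction of a millimetre) and idealised, uncorrelated noise; the 50 mm example is just illustrative.

```python
import math

# Pixel dimensions (width, height) as quoted in this post.
A7R = (7354, 4912)
A7S = (4246, 2840)

# How much wider the A7R frame is in pixels.
linear_factor = A7R[0] / A7S[0]

# How many A7R pixels get squeezed into each A7S-sized pixel when downscaling.
area_ratio = (A7R[0] * A7R[1]) / (A7S[0] * A7S[1])

# Ideal noise reduction from that averaging (uncorrelated noise assumed).
# A centre crop averages nothing, so its per-pixel noise is unchanged.
ideal_noise_gain = math.sqrt(area_ratio)

def matching_focal_length(a7s_focal_mm: float) -> float:
    """Focal length for the A7R so its centre crop at A7S pixel width frames
    roughly what the A7S sees at a7s_focal_mm (equal sensor widths assumed)."""
    return a7s_focal_mm / linear_factor

print(f"downscale averages ~{area_ratio:.2f} A7R pixels per output pixel")
print(f"ideal noise reduction from downscaling ~{ideal_noise_gain:.2f}x")
print(f"A7S at 50 mm ~ A7R cropped at {matching_focal_length(50.0):.1f} mm")
```

In other words, the downscale hands the A7R roughly a 1.7x noise advantage for free, before the sensors themselves are compared at all; a crop would leave that advantage on the table.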

And speaking of resolution, the A7R reportedly comes without an AA filter and is supposed to be sharper than cameras that have one (such as the A7 or A7S). And yet, the A7S appears to resolve detail as well as the A7R. Here is Yet Another Wabbit Hole to fall down, but we'll save it for another time.

In the end, the DPReview-provided ARW (RAW) A7S sample looks smoother and sharper to me than the A7R sample _before_ I apply any noise reduction or capture sharpening. I'll make those adjustments in Part Two of this series so we can begin to see what the differences might be as we start processing an image.

For the moment, however, can someone explain to me like I'm in kindergarten how downsizing an A7R image to A7S dimensions gives us a valid departure point for understanding relative image quality?
